Embeddings and Vectors in AI

In AI speak, vectors describe the general meaning of a sentence, much as GPS coordinates describe the location of a place on Earth. Like GPS coordinates, vectors consist of numbers. How does this work in machine learning?

We can use math to calculate the distance between two sets of latitude and longitude coordinates and know from that calculation how far apart the places are. It’s the same with data that is prepared within AI databases. Creating an embedding simply means converting data (like a sentence) into a meaningful numerical representation. If we create an embedding for the sentence “I feel great”, we get a vector (the collection of numbers) that may look like this:

[

0.008571666, -0.034790553, -0.042013984, 0.024244195,

0.0059016035, -0.017718265, 0.03698748, 0.010465656,

0.005014277, 0.053828552, 0.01634891, -0.0025038158,

0.011476494, -0.024566405, -0.03616831, -0.048698016,

-0.022726284, -0.081891805, -0.038213458, 0.06429547,

…

]

A real embedding vector is much longer than this excerpt. Like GPS coordinates, it is a set of numbers, only with many more dimensions. And like GPS coordinates, the vector points somewhere: not to a place on our planet, but to a location that represents a “meaning” as the AI understands it.
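For readers who want to produce such a vector themselves, here is a minimal sketch of how one might request an embedding from a locally running Ollama server. It assumes Ollama's default local address (`http://localhost:11434`), its `/api/embeddings` endpoint, and a model that has already been pulled. The helper names `build_request`, `parse_embedding` and `embed` are mine, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port and the model
# has already been pulled (for example with "ollama pull all-minilm").
OLLAMA_URL = "http://localhost:11434/api/embeddings"


def build_request(model: str, text: str) -> urllib.request.Request:
    """Build the HTTP POST request that asks Ollama for one embedding."""
    payload = json.dumps({"model": model, "prompt": text}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )


def parse_embedding(body: bytes) -> list[float]:
    """Extract the vector (a list of floats) from the JSON response body."""
    return json.loads(body)["embedding"]


def embed(model: str, text: str) -> list[float]:
    """Send the request and return the vector. Needs a running server."""
    with urllib.request.urlopen(build_request(model, text)) as response:
        return parse_embedding(response.read())
```

With the server running, `embed("all-minilm", "I feel great")` should return a long list of floats like the excerpt above. The exact numbers and the length of the list depend on the model.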

Let’s create another vector for a different sentence: “I am very hungry”. Again, we get a set of numbers, a vector:

[

-0.00018228134, -0.0043588853, -0.038345303, 0.024235666, -0.04877074,

-0.024922315, 0.043089297, 0.034793653, 0.0315717, 0.0028287298,

0.033876095, -0.0032970305, 0.007692303, -0.047427267, 0.0048615676,

-0.055078086, -0.047022387, -0.05258191, -0.06273587, 0.07900981,

0.009487534, 0.03581712, -0.04313395, -0.013954903, 0.00004682049,

0.02608665, -0.0055042687, 0.0076092384, 0.031084672, 0.05447333,

…

]

This vector also points to a “meaning” within the AI. We can now use math again to compare the two vectors. To be clear: we are not comparing the words themselves (“how similar are the two sentences in a lexical sense?”); we are comparing the “meaning” of those sentences.

So, if both vectors are “pointing” to a meaning within an AI database, we can use math to find out whether both vectors point to the same area of the AI “meaning” center or not. For this we use cosine similarity, which measures the angle between two vectors: 1.0 means they point in the same direction, 0 means they are at right angles, and negative values mean they point in opposing directions. We could use other measures (e.g. Euclidean distance), but for vectors normalized to length 1 they produce the same ranking, and since nearly everybody else uses cosine similarity, we’ll use it too.

Explainer: https://tomhazledine.com/cosine-similarity/
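For those who prefer code to formulas, here is a minimal sketch of cosine similarity in plain Python. The three-dimensional vectors are made-up toy values, not real embeddings, and the function names are my own. The last part checks the link to Euclidean distance: for vectors scaled to length 1, the squared distance equals 2 - 2 × cosine similarity, which is why both measures rank results the same way.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def normalize(a):
    """Scale a vector to length 1 without changing its direction."""
    length = math.sqrt(sum(x * x for x in a))
    return [x / length for x in a]


def euclidean_distance(a, b):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy vectors, invented for illustration only.
u = [0.2, -0.1, 0.4]
v = [0.1, 0.3, 0.5]

similarity = cosine_similarity(u, v)
distance = euclidean_distance(normalize(u), normalize(v))

print(f"cosine similarity:           {similarity:.4f}")
print(f"2 - 2 * cosine similarity:   {2 - 2 * similarity:.4f}")
print(f"squared distance (unit len): {distance ** 2:.4f}")
```

The last two printed values agree up to floating-point rounding. Real embedding vectors work exactly the same way, just with hundreds of numbers instead of three.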

OK, how close is the meaning of “I feel great” to “I am hungry”?

Using the “mxbai-embed-large” AI model, we see something around 0.60, which is not close. If both sentences were identical, we would see 1.0. In our test case, the vectors are only vaguely correlated (both are feelings, after all), but according to the AI they don’t have the same meaning. If we use something even more different, say “My dog is crazy”, we get a similarity score of 0.44: the vectors really point in different directions. Now let’s look at something closer to “I feel great”. What about “I feel amazing”? It scores 0.92, which is very close. “I am happy” scores 0.85, which is also really close to the first sentence. The same goes for “I am exhilarated”, and even “I am very healthy” still scores above 0.7, so there’s a lot of similarity.

We can use this technique to compare almost anything: texts, images, audio. We could take two documents, break them down into chunks, and compare the individual chunks from both documents with each other to get a similarity score. This is used to detect plagiarism, but also to compare input data (a question) with the content of a document.
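The chunk comparison could be sketched roughly like this. To keep the example runnable without a model, it uses a toy word-count “embedding” as a stand-in. That stand-in compares words, not meaning, so in practice you would pass in a function that returns real embedding vectors instead. All function names and the two sample texts are invented for illustration.

```python
import math
from collections import Counter


def chunk_words(text, size):
    """Split a text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def make_toy_embed(vocabulary):
    """Stand-in for a real model: counts how often each known word occurs."""
    def embed(chunk):
        counts = Counter(chunk.lower().split())
        return [float(counts[word]) for word in vocabulary]
    return embed


def best_matches(chunks_a, chunks_b, embed):
    """For every chunk of A, find the most similar chunk of B."""
    vectors_b = [embed(chunk) for chunk in chunks_b]
    matches = []
    for chunk in chunks_a:
        vector = embed(chunk)
        scored = [(cosine_similarity(vector, vb), other)
                  for vb, other in zip(vectors_b, chunks_b)]
        if scored:
            score, other = max(scored)
            matches.append((chunk, other, score))
    return matches


doc_a = "the sun is shining today and the car is blue"
doc_b = "the car is blue and the sun is shining today"
chunks_a = chunk_words(doc_a, 5)
chunks_b = chunk_words(doc_b, 5)
vocabulary = sorted(set((doc_a + " " + doc_b).lower().split()))

matches = best_matches(chunks_a, chunks_b, make_toy_embed(vocabulary))
for chunk, other, score in matches:
    print(f"{score:.2f}  {chunk!r} ~ {other!r}")
```

Comparing a question with a document works the same way: the question is the only “chunk” on one side, and the best-scoring chunks of the document are the candidates for an answer.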

Finally: we need an AI to create our embeddings and vectors. And like everything else, those vectors are only as good as the AI being used. I found that the models “mxbai-embed-large” and “nomic-embed-text” are not really good, as their results are skewed towards high positive values, which suggests similarities that do not exist. Why? Who knows. So for now, I will be using “all-minilm” (all models are available via ollama.com). Here are examples for the models I tested:

Test sentence 1: The sun is shining.

Test sentence 2: The car is blue.

all-minilm: 0.19070

mxbai-embed-large: 0.4122

nomic-embed-text: 0.527577

Test sentence 1: I am hungry.

Test sentence 2: I need food.

all-minilm: 0.70641

mxbai-embed-large: 0.82909

nomic-embed-text: 0.86591

Test sentence 1: I am a doctor.

Test sentence 2: I am a physician.

all-minilm: 0.91803

mxbai-embed-large: 0.96768

nomic-embed-text: 0.93100

As one can see, the spread of similarities is much smaller with “mxbai-embed-large” and “nomic-embed-text”, with relatively high numbers even for sentences that are not related at all. There is a very in-depth and interesting discussion of this topic on the “OpenAI Developer Community Forum”.

https://community.openai.com/t/embeddings-and-cosine-similarity/17761/13

Check it out if you want a deep-dive into this problem.
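To put a number on that spread, here is a small sketch that takes the scores from the three tests above and computes, for each model, the gap between its highest and lowest score. Only the numbers come from my tests; the `spread` helper is just for illustration, and three sentence pairs are of course a very small sample.

```python
# Cosine similarities from the three tests above, in the order:
# sun/car, hungry/food, doctor/physician.
scores = {
    "all-minilm": [0.19070, 0.70641, 0.91803],
    "mxbai-embed-large": [0.4122, 0.82909, 0.96768],
    "nomic-embed-text": [0.527577, 0.86591, 0.93100],
}


def spread(values):
    """Gap between the highest and the lowest score."""
    return max(values) - min(values)


spreads = {model: round(spread(values), 3) for model, values in scores.items()}

for model, value in spreads.items():
    print(f"{model}: {value}")
```

This gives roughly 0.727 for all-minilm, 0.555 for mxbai-embed-large and 0.403 for nomic-embed-text. The wider the gap between unrelated and closely related sentences, the easier it is to pick a threshold that separates them.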

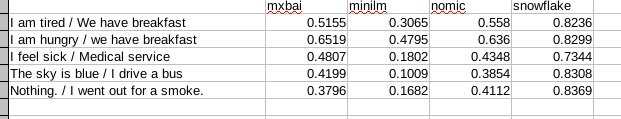

Some more testing with the embedding models available via “ollama” reveals the difficulty of selecting the right model, as they all are, well, not really good.

As an example: how can snowflake assign a 0.8x similarity to “Nothing” vs. “I went out for a smoke”? Why do all models fail to see the (relative) similarity between “I feel sick” and “Medical service”? As those models are usually the backbone of RAG systems, it’s unfortunately clear why most RAG environments struggle to produce meaningful results.

Michaela Merz is an entrepreneur and first-generation hacker. Her career started even before the Internet was available. She invented and developed a number of technologies now considered to be standard in modern web environments. She is a software engineer, a Wilderness Rescue volunteer, an Advanced Emergency Medical Technician, an FAA Part 61 (PPL, IFR) and Part 107 certified UAS pilot, and a licensed ham.